If you’re an engineer, you’ve probably had the experience of trying to set up or maintain a local development environment. And while I could be wrong, I’d be willing to bet that, more often than not, it was…not a great experience.

At a previous company, when I was just starting out writing code, a brilliant senior engineer spent multiple hours on a call with me summoning arcane CLI commands to try to get my environment up and running so I could contribute to a project. We finally succeeded, but then it broke the following week in a new and confusing way when something changed. It needed more painful TLC before I could get to the actual thing I wanted to do, which was not, in fact, maintaining a local development environment, but shipping code to deliver value to customers.

At Help Scout, our developer experience team has made huge progress in recent years to improve what was already a pretty good local setup (you’ll hear more about their important work in future posts!). These days, most of the time, my dev environment just works, and when it doesn’t (usually because I haven’t updated it recently enough), I can generally figure out why, unblock myself, and get back to work.

But then I’m a software engineer, a type of person who, relative to the population at large, has a much higher tolerance for throwing spaghetti at the wall of a problem I don’t really understand. I scan through old Stack Overflow threads for relevant morsels of information, interact with Docker and brew and bash profiles, and, you know, generally turn it off, try something else, and turn it on again (and again and again and again) until something changes.

Not everyone wants to be a hacker

At most companies, though, there is a broader group of stakeholders (designers, product managers, QA specialists, support team members) who would benefit from the ability to test development code but who realistically (and reasonably!) are probably never going to want to deal with the hassle of configuring and maintaining a dev environment.

There are different ways to handle the problem of getting code to these folks before it goes to customers. One classic approach is to ship the code to production behind a feature flag, which you can then enable selectively for the folks on your team who want to test it out. This is great in terms of simplicity of access — these stakeholders just need to log into specific accounts in the app they already use — but it really limits agility and iteration because each change requires a new Jira card, PR, review, CI pass, and deployment before the new code shows up behind the feature flag.

The hot new approach to this problem is cloud-based environments, the shiny successors to staging servers. With this, you get the consumer simplicity of testing in production (just go to this URL and have at it!) without actually having to ship to production, making iteration cycles way faster. We’re experimenting with this paradigm and have had some good experiences, but then we get to another problem, which is how precisely a local environment can reflect production. I think everyone who’s had trouble setting up a dev environment has probably also had the experience of getting something working locally and then, after merging and deploying it, finding out that it doesn’t quite work the same way in production.

Standing on the shoulders of giants

I felt these two problems in my work and could understand how they were holding things back, but, for a while, I didn’t really know what to do about them. As I onboarded and got more context about Help Scout’s architecture and engineering culture (usually by searching Slack to try to figure out something I didn’t understand), I would occasionally see references to usage of an older Help Scout project called ProxyPack, so one day, led by curiosity, I decided to look into it.

From an archived GitHub repo, I learned that ProxyPack was an internally-developed Webpack plugin that was used to make it possible to run local development JS code against our production app’s backend (a PHP monolith supported by a network of Java services). It wasn’t accessible to everyone and still required some local setup and terminal manipulation (including setting up a spoofed SSL cert), but QA engineers I talked to who had been around when it was in use said it was really useful for them in terms of saving time and having more confidence in their testing. However, it was apparently difficult to maintain (again, you had to deal with SSL certs!) and after the developer who built it left, it eventually fell into obsolescence.

Learn Something Day

I’ll be honest that my personal joy in life is not Webpack configuration (is it anyone’s?), so I didn’t consider trying to revive ProxyPack itself. But the core idea was really intriguing! Help Scout encourages employees across the organization to take regular Learn Something Days where they step away from their normal duties and focus on learning things that will help them (and by extension, Help Scout) grow. This can take a variety of forms: taking courses, attending conferences, and spiking out prototypes and proofs of concept (POCs).

My favorite way to learn is by doing, so I always use these days to hack on ideas. While I’m not great with Webpack, what I do actually know a lot about is Chrome extensions. In fact, part of the reason I work at Help Scout is that in my previous job, I built Chrome extensions on top of it to customize the app to my team’s specific needs. So the next time I had a Learn Something Day I started to noodle around with an idea: Could I get the same end-user outcome of ProxyPack — the ability to easily test dev code against production — through an extension?

Bundles of joy

My inspiration came when I was looking at the Network tab to examine an API response in production against the one I was getting in my local environment. While I was scanning through the list, I accidentally clicked on one of the JavaScript bundle requests rather than the API response I was looking for. Like many apps, we store our JS code in a distributed CDN for the fastest possible access by users no matter where they are. Let’s say the bundle URL for one of the SPAs that make up our app looks like this:

https://helpscoutblog.cloudfront.net/frontend/inbox-settings.js

An idea started to develop in my mind. I switched to the other browser where I was running the local environment. The local dev environment builds the JS code and runs it on a simple nginx server that’s used in lieu of the CDN.

https://localenv.helpscout.com/frontend/inbox-settings.js

I had a eureka moment — what if, while using the production app, I could programmatically intercept requests to the production versions of the bundles and redirect them to the local versions of the same files?

Declaration of independence

Chrome extensions have a variety of superpowers not granted to standard web apps. One of the most powerful of those is the ability to interact with HTTP requests made in a user’s browser. The traditional mechanism for doing so was the webRequest API, which let you register a callback for HTTP requests and allowed you to listen in on them or, with the blocking option, intercept and modify them (URL/headers/request body) while they’re in flight.

The webRequest API would have made implementing my idea very straightforward, and I started with that as a proof of concept, but then I learned that the blocking option for webRequest is in the process of being deprecated. However, Chrome has provided a successor to webRequest, the declarativeNetRequest API.
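For comparison, here is a minimal sketch of what a webRequest-based redirect can look like. It is not the code from the original proof of concept: the `toLocalUrl` helper and the two prefix constants are hypothetical names built from the example URLs above, and the listener assumes a Manifest V2 extension that has the `webRequest` and `webRequestBlocking` permissions plus host access to the CDN.

```javascript
// Prefixes taken from the example bundle URLs above (constant names are hypothetical)
const PROD_PREFIX = 'https://helpscoutblog.cloudfront.net/frontend/';
const LOCAL_PREFIX = 'https://localenv.helpscout.com/frontend/';

// Map a production bundle URL to its local counterpart.
// Returns null when the URL is not a production bundle.
function toLocalUrl(url) {
  if (!url.startsWith(PROD_PREFIX)) {
    return null;
  }
  return LOCAL_PREFIX + url.slice(PROD_PREFIX.length);
}

// Register the blocking listener only when the webRequest API is available,
// so the helper above can also be run outside an extension.
if (typeof chrome !== 'undefined' && chrome.webRequest) {
  chrome.webRequest.onBeforeRequest.addListener(
    (details) => {
      const redirectUrl = toLocalUrl(details.url);
      // An empty response leaves the request untouched
      return redirectUrl ? { redirectUrl } : {};
    },
    { urls: [PROD_PREFIX + '*'] },
    ['blocking']
  );
}
```

The imperative callback is what makes this approach flexible: any JavaScript can run before the redirect decision is made, and that is exactly the freedom the declarative successor takes away.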

This API is much tighter in terms of what it allows you to do to requests (and presumably its declarative nature also makes it easier for Chrome’s app reviewers to automate scans for harmful extension behavior). Rather than having full control to imperatively modify the requests yourself in your extension code with webRequest, you register rules, each pairing a condition (which requests to act on) with an action (what to do to them), according to a limited JSON schema. The browser itself then applies those rules outside of your extension code, which is more secure.

Request…intercepted

At the root of a Chrome extension’s code is a manifest that requests the permissions your extension needs and lists the location of its assets so Chrome knows where to find your code. Here’s a minimal manifest for our use case, claiming the declarativeNetRequest permission and pointing the extension to the file where our rule is registered:

// manifest.json

    {
      "name": "local proxy experiment",
      "version": "1.0",
      "manifest_version": 3,
      "description": "Proxies hs-app-ui JS requests to hs-stack bundles",
      "host_permissions": ["<all_urls>"],
      "declarative_net_request": {
        "rule_resources": [
          {
            "id": "proxy",
            "enabled": true,
            "path": "proxyRule.json"
          }
        ]
      },
      "permissions": ["declarativeNetRequest"]
    }

I started out by hardcoding a rule for one file:

// proxyRule.json

    [
      {
        "id": 1,
        "priority": 1,
        "action": {
          "type": "redirect",
          "redirect": {
            "url": "https://localenv.helpscout.com/frontend/inbox-settings.js"
          }
        },
        "condition": {
          "urlFilter": "https://helpscoutblog.cloudfront.net/frontend/inbox-settings.js"
        }
      }
    ]

As you can see, this rule has a urlFilter condition so that when a request is made for the production bundle, it redirects it to the local version. I activated the extension, made a change, and refreshed the page, and it worked: I could see my local dev code in the production app!

We split our bundles by route to reduce unnecessary code loading, though, and I didn’t want to have to hardcode all of them and then need to update the extension any time we added a new one. declarativeNetRequest has an option to help handle that, thankfully — you’re able to use regexes both for setting conditions and for modifying the resulting URL. That sounds scary (OK, regexes always sound scary to me!), but it’s really not that bad. Here’s the updated version:

// proxyRule.json

    [
      {
        "id": 1,
        "priority": 1,
        "action": {
          "type": "redirect",
          "redirect": {
            "regexSubstitution": "https://localenv.helpscout.com/frontend/\\1"
          }
        },
        "condition": {
          "regexFilter": "https://helpscoutblog.cloudfront.net/frontend/(.*)"
        }
      }
    ]

What’s happening here is that in the condition, we’re capturing everything after /frontend/ with (.*), then using the numbered capturing group \1 to append that to the end of the URL we’re redirecting to (the backslash is doubled in the file because JSON requires it to be escaped). With that setup, I could move through various apps in Help Scout, make changes locally, refresh the tab, and see my changes “in production.” I shared this POC (which, in tribute to ProxyPack, I named ProxyPal) with the team and started using it in my own work; several engineers and QA folks also reported finding it helpful.
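To make the capture-and-substitute step concrete, here is a small sketch that imitates the regex rule in plain JavaScript. It is only an approximation: Chrome evaluates the real rule itself using RE2 syntax, and the `simulateRedirect` name is hypothetical. JavaScript’s `String.prototype.replace` writes the first capture group as `$1` where the rule uses `\1`, and the dots are escaped here for strictness, which the rule above does not do.

```javascript
// JavaScript stand-in for the rule's regexFilter
const PROD_BUNDLE = /https:\/\/helpscoutblog\.cloudfront\.net\/frontend\/(.*)/;

// Stand-in for regexSubstitution: $1 plays the role of \1
const LOCAL_TEMPLATE = 'https://localenv.helpscout.com/frontend/$1';

// Return the URL a matching request would be redirected to;
// URLs that don't match the filter come back unchanged.
function simulateRedirect(url) {
  return url.replace(PROD_BUNDLE, LOCAL_TEMPLATE);
}

console.log(
  simulateRedirect('https://helpscoutblog.cloudfront.net/frontend/inbox-settings.js')
);
// → https://localenv.helpscout.com/frontend/inbox-settings.js
```

Because (.*) is greedy, anything after /frontend/ is carried over, including nested paths and query strings.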

Expanding the user base

I was happy with what I had built, but it didn’t fully handle my intended use case. It helped for more technical QA folks (and was also great for engineers testing bug fixes in development against the production app and data where the bug was occurring), but you still needed to maintain a local git repo and run the dev server, so it wasn’t as universally accessible as I wanted it to be.

Luckily, my teammate Maxi Ferreira is a Cloudflare expert (as well as a great newsletter writer), and he helped me use that service to make ProxyPal available to everyone on the team. Here’s what we did:

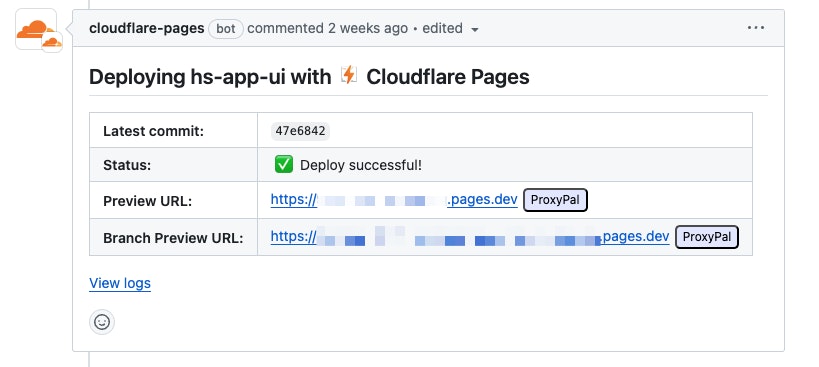

On push to a PR branch, we built a copy of the JS assets and published it to Cloudflare Pages.

Using Cloudflare’s awesome built-in GitHub integration, every PR includes an auto-generated comment with a link to that build.

The extension does a little extra magic using a content script that injects a button next to the Cloudflare comment:

// github.js

    import './github.css';

    // Read the PR title from the GitHub page header
    const getPrTitle = () => {
      return document.querySelector('.gh-header-title').innerText;
    };

    // Keep a reference to the active observer so it can be disconnected later
    let linkObserver = null;

    // Inject a ProxyPal button after a link to a Cloudflare Pages build
    function injectLink(aTag) {
      // Only handle links that point at a pages.dev build
      if (!aTag.href.includes('pages.dev') || aTag.href === 'https://pages.dev/') {
        return;
      }

      // Skip links that already have a button so re-runs don't add duplicates
      const next = aTag.nextElementSibling;
      if (next && next.classList.contains('proxyPalButton')) {
        return;
      }

      // Create the new button element
      const button = document.createElement('button');
      const prTitle = getPrTitle();
      button.textContent = 'ProxyPal';
      button.className = 'proxyPalButton';
      button.onclick = () => {
        // Ask the background service worker to start proxying to this build
        chrome.runtime.sendMessage({
          type: 'SET_ACTIVATION_STATUS',
          value: {
            status: true,
            envUrl: aTag.href,
            envDescription: prTitle,
          },
        });
        alert(`Now proxying to ${prTitle} at ${aTag.href}`);
      };

      // Insert the button after the existing <a> tag
      aTag.parentNode.insertBefore(button, aTag.nextSibling);
    }

    function handleLinkInjection() {
      // Process all existing <a> tags
      document.querySelectorAll('a').forEach(injectLink);

      // Watch the DOM for <a> tags added later
      linkObserver = new MutationObserver((mutations) => {
        mutations.forEach((mutation) => {
          mutation.addedNodes.forEach((node) => {
            // Check if the added node is an <a> tag
            if (node.nodeType === Node.ELEMENT_NODE && node.tagName === 'A') {
              injectLink(node);
            }
          });
        });
      });

      // Start observing the document body for added nodes
      linkObserver.observe(document.body, {
        childList: true,
        subtree: true,
      });
    }

    // Handle SPA navigation
    function handleNavigation() {
      // Disconnect the previous observer so observers don't pile up
      if (linkObserver) {
        linkObserver.disconnect();
      }

      // Re-run the link injection function
      handleLinkInjection();
    }

    // Run once on page load
    handleLinkInjection();

    // Poll for URL changes, since GitHub navigates without full page loads
    let lastUrl = location.href;
    setInterval(() => {
      const currentUrl = location.href;
      if (currentUrl !== lastUrl) {
        lastUrl = currentUrl;
        handleNavigation();
      }
    }, 1000);

When the user clicks on the button, this script passes a message to the extension’s background service worker, where we’re now using a template string to dynamically set the base URL to the link we got from GitHub, like this:

// background-service-worker.js

    // ID of the single dynamic redirect rule (value assumed; any unique integer works)
    const PROXY_ID = 1;

    chrome.runtime.onMessage.addListener((request) => {
      switch (request.type) {
        case 'SET_ACTIVATION_STATUS': {
          // envUrl matches the key sent by the content script
          const { status, envUrl } = request.value;
          if (status) {
            // Drop any trailing slash so the substitution doesn't produce "//"
            const baseUrl = envUrl.replace(/\/+$/, '');
            // Replace the existing proxy rule with one that points at the PR build
            chrome.declarativeNetRequest.updateDynamicRules({
              removeRuleIds: [PROXY_ID],
              addRules: [
                {
                  id: PROXY_ID,
                  priority: 1,
                  action: {
                    type: 'redirect',
                    redirect: {
                      regexSubstitution: `${baseUrl}/\\1`,
                    },
                  },
                  condition: {
                    regexFilter: 'https://helpscoutblog.cloudfront.net/frontend/(.*)',
                  },
                },
              ],
            });
          }
          break;
        }
      }
    });

We uploaded the extension to a private Chrome store for our organization and now, with no local install required and one click of a button in a PR, anyone on the team can test in production. When I’m fine-tuning a PR with a designer, PM, or QA tester, they can provide notes, and I can implement them, push up a commit, and then tell them to refresh their browser and see the changes immediately.
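Returning to the service worker for a moment: one way to make the rule-building logic easier to test is to pull it into pure functions. The sketch below is an assumption about how that could look, not ProxyPal’s actual code. `buildProxyRule` and `buildRuleUpdate` are hypothetical helpers, the `PROXY_ID` value is assumed, and treating `status: false` as “remove the rule” is a guess at how deactivation might work, since the snippet above only shows the activation path.

```javascript
// Assumed id for the single dynamic rule
const PROXY_ID = 1;
const PROD_BUNDLE_FILTER = 'https://helpscoutblog.cloudfront.net/frontend/(.*)';

// Build the redirect rule for a given preview environment URL
function buildProxyRule(envUrl) {
  // Strip trailing slashes so the substitution never contains "//"
  const baseUrl = envUrl.replace(/\/+$/, '');
  return {
    id: PROXY_ID,
    priority: 1,
    action: {
      type: 'redirect',
      redirect: { regexSubstitution: `${baseUrl}/\\1` },
    },
    condition: { regexFilter: PROD_BUNDLE_FILTER },
  };
}

// Build the argument for updateDynamicRules: always remove the old rule,
// and add a new one only when proxying is being switched on.
function buildRuleUpdate({ status, envUrl }) {
  return {
    removeRuleIds: [PROXY_ID],
    addRules: status ? [buildProxyRule(envUrl)] : [],
  };
}

// Wire the helpers up only when running inside the extension
if (typeof chrome !== 'undefined' && chrome.declarativeNetRequest) {
  chrome.runtime.onMessage.addListener((request) => {
    if (request.type === 'SET_ACTIVATION_STATUS') {
      chrome.declarativeNetRequest.updateDynamicRules(
        buildRuleUpdate(request.value)
      );
    }
  });
}
```

Keeping the Chrome calls behind a guard means the two helpers can be checked in a plain Node process without mocking any extension APIs.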

You do need a local environment

The title of this post is a bit facetious: your app still needs a local environment, for a variety of reasons! One is, you know, your backend developers (we love you, backend developers!). Another is that this particular approach limits your ability to test cross-browser before production. In theory, the extension should also work in Firefox and the Chromium-based Edge, but at the time that we started development, the declarativeNetRequest API wasn’t fully supported in production for Firefox (though it is now!), and we haven’t yet explored that option (Safari is unfortunately a non-starter). That means we have to take more care to test those flows in a traditional local environment or in actual production.

But even if you need a local environment, there are a lot of other “yous” in your organization that have so much to offer to in-progress work. An approach like this can help you bring them into the development process earlier and more easily, which can only benefit your product and, by extension, your customers.